Shiji Xin

👋 Hi! I'm Shiji Xin, an algorithm developer at Applied Materials. My current research interest is making advances in AI more accessible, either more useful or more efficient. If you're interested in collaborating, feel free to reach out!

Social networks I'm on: Email: shijixin dot ai at gmail dot com

My research works:

- Fast Inference for Augmented Large Language Models. NeurIPS 2025

- GlobalTomo: A global dataset for physics-ML seismic wavefield modeling and FWI. NeurIPS 2025 Datasets & Benchmarks

- Optimal Block Sparse Attention Mask for Faster LLM Inference. Course project for MIT 15.095

- FastAgent: Enhanced Scheduling Strategies for Efficient Tool-Integrated Large Language Model Serving. Compound AI Systems Workshop 2024

- MEWL: Few-shot multimodal word learning with referential uncertainty. ICML 2023

- On the Connection between Invariant Learning and Adversarial Training for Out-of-Distribution Generalization. AAAI 2023 Oral

Some of my toy projects:

- sts-demo: A speech-to-speech demo using the latest text-to-speech model in the OpenAI API, with streaming and with emotion generated together with the response.

- GGUF_Offload: Running quantized DeepSeek V3/R1 on consumer GPUs with offloading

- FlaxAttention: A JAX implementation of PyTorch's FlexAttention

-

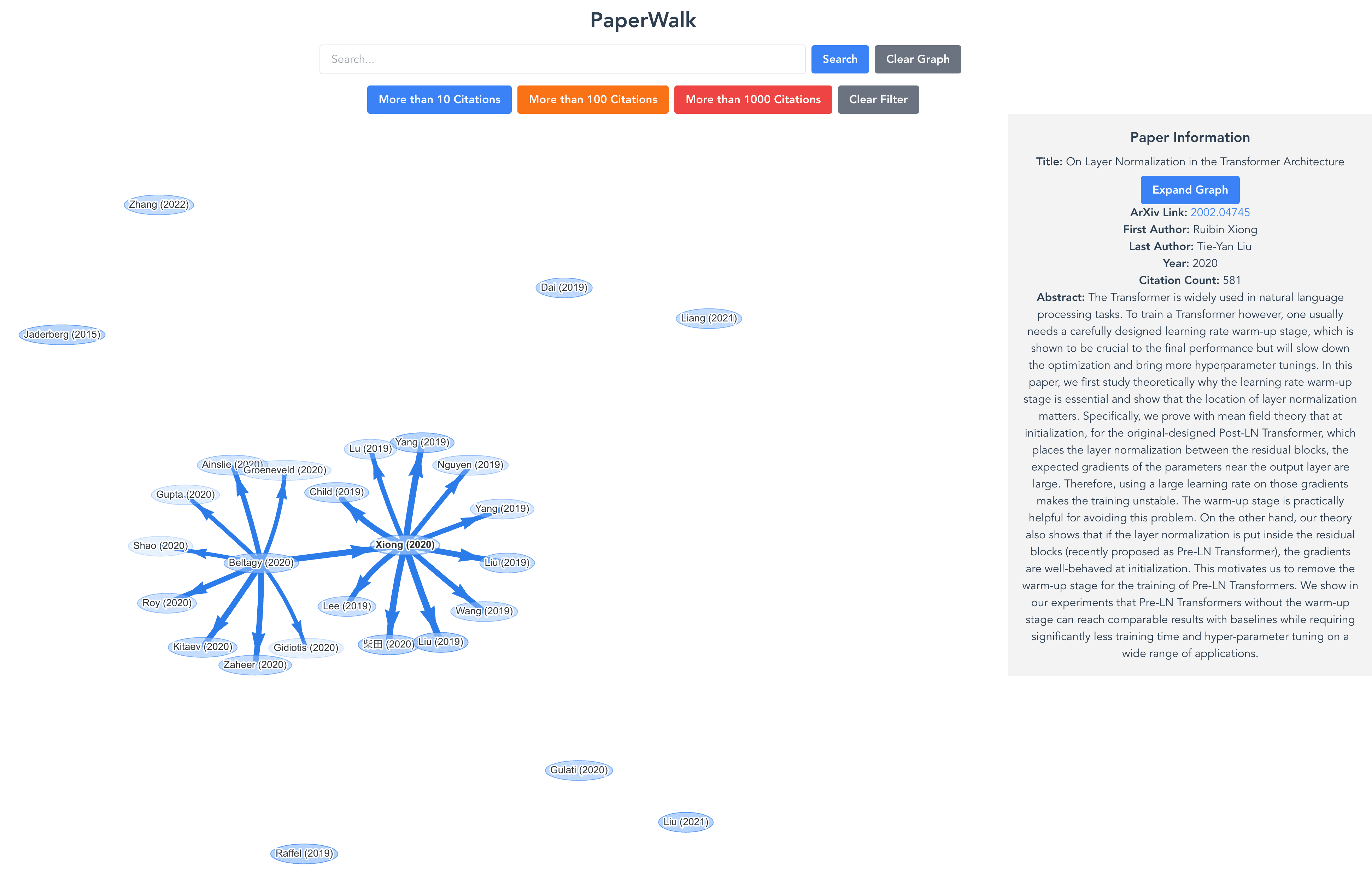

PaperWalk

-

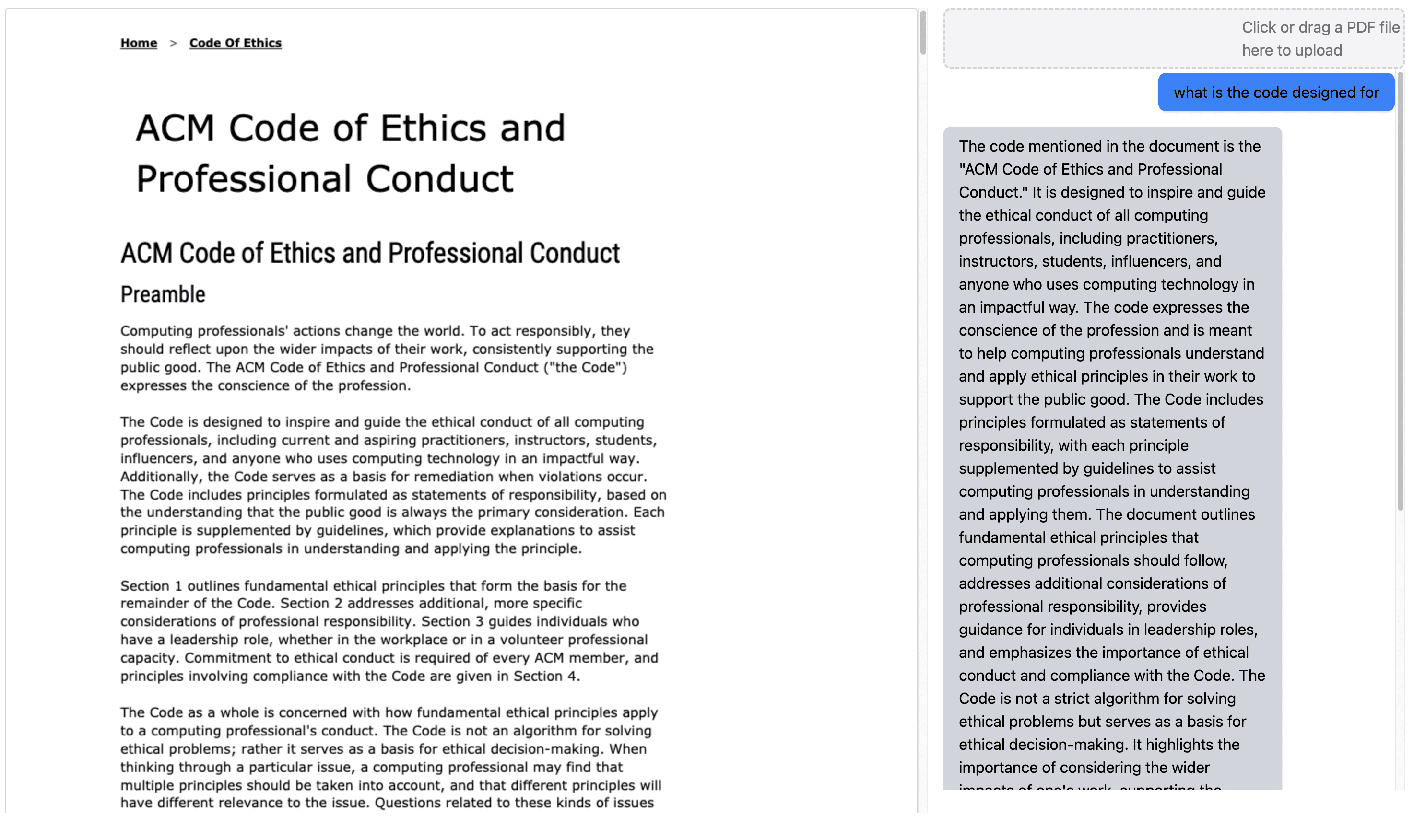

ChatPDF

- PasteBin

Birds I've seen in the US: Bird List